This lawyer had a very, very bad day and may face serious consequences for leaning on the AI tool ChatGPT too much, too soon.

For those of you worried that AI will replace you in your white-collar job, we're not quite there yet. (You, me, and many others can breathe a sigh of relief.)

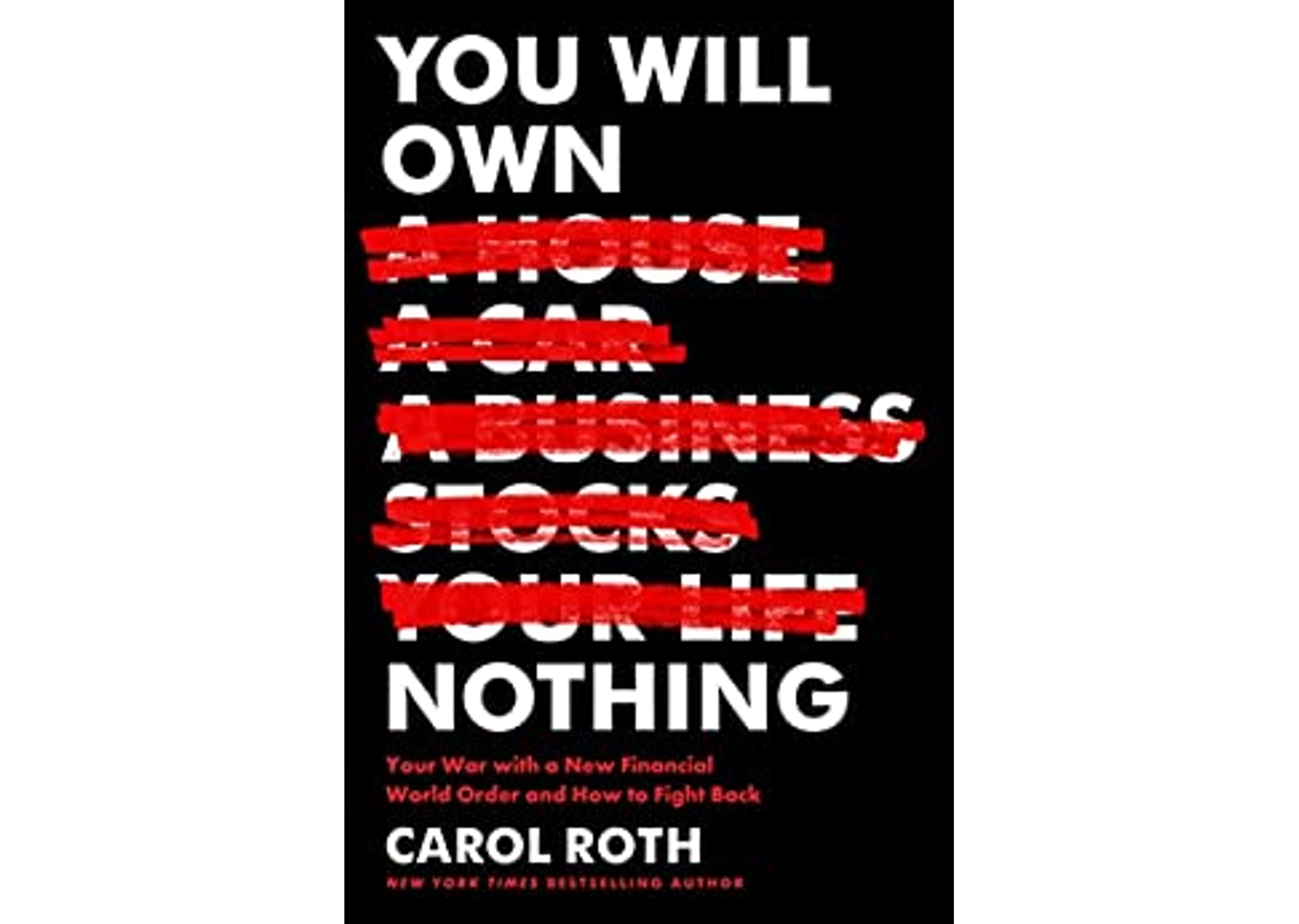

Carol posted this on LinkedIn.

I recommend reading the full article by Benjamin Weiser in The New York Times, “Here’s What Happens When Your Lawyer Uses ChatGPT,” as a cautionary tale. Here is how it starts:

> The lawsuit began like so many others: A man named Roberto Mata sued the airline Avianca, saying he was injured when a metal serving cart struck his knee during a flight to Kennedy International Airport in New York.
>
> When Avianca asked a Manhattan federal judge to toss out the case, Mr. Mata’s lawyers vehemently objected, submitting a 10-page brief that cited more than half a dozen relevant court decisions. There was Martinez v. Delta Air Lines, Zicherman v. Korean Air Lines and, of course, Varghese v. China Southern Airlines, with its learned discussion of federal law and “the tolling effect of the automatic stay on a statute of limitations.”
>
> There was just one hitch: No one — not the airline’s lawyers, not even the judge himself — could find the decisions or the quotations cited and summarized in the brief.
>
> That was because ChatGPT had invented everything.

If you are wondering whether the author of the brief asked ChatGPT to confirm that the information was correct: they did.

So now you are probably scratching your head and wondering how this could happen. From the article:

> ChatGPT generates realistic responses by making guesses about which fragments of text should follow other sequences, based on a statistical model that has ingested billions of examples of text pulled from all over the internet. In Mr. Mata’s case, the program appears to have discerned the labyrinthine framework of a written legal argument, but has populated it with names and facts from a bouillabaisse of existing cases.

Oops!

I will keep posting this kind of content because you need to know what AI can and cannot do right now. This technology needs guardrails, though it may already be too late for that.

Be vigilant and stay safe, folks.