For the past few months, it seems I haven’t been able to look at LinkedIn without seeing something about ChatGPT or other AI writing tools. I spent some of my favorite corporate years working in risk management practices at the big consulting firms, so part of me was looking out for the inevitable intellectual property (IP) and legal ramifications of these incredible tools.

Please note: I do not believe these are going away, but with power does come responsibility.

I want to give a shoutout to Josh Bernoff for sharing this article by Michelle Garrett on LinkedIn: “The Realities of Using ChatGPT to Write for You – What to Consider When It Comes to Legalities, Reputation, Search and Originality.” (If you are a writer, go follow Josh Bernoff right now. He posts the best stuff.)

Here is why Garrett was inspired to research this topic, and why you, as someone who either writes content or hires people to write it, should care:

“What grabbed my attention was a post by someone who had hired a freelance writer to create content – only to find that what the writer had delivered was, in fact, generated by the chatbot.

Alarm bells started going off in my head – how will a client know if a writer is actually writing the content they hired them to write – or using ChatGPT to write for them? Will writing content yourself versus allowing a bot to write it now be a differentiator? What about plagiarism and copyright infringement? Can clients get into legal trouble if they accept content written by a chatbot and publish it as their own? What happens when a consultant feeds proprietary client information into the chatbot?”

My immediate response to reading this was, “Ack!”

As it turns out, this is incredibly complex. AI tools are trained on information available on the internet. As you have no doubt experienced firsthand, information on the internet is sometimes correct but often incomplete or incorrect. An AI tool may or may not check for that.

And it may or may not include sources so you can check it. Remember that old “garbage in, garbage out” adage? It definitely applies here.

And what about paying a contractor to create content for you? If they rely on AI, they may be incorporating material with copyright issues because, once again, AI may not flag it. That could expose you to both legal and reputational risk.

And then there is the risk that you or your writer may accidentally feed proprietary information into an AI program to generate ideas or content. That is a giant can of worms: depending on the program’s terms of service (which very few people read), you may have given that program the right to share the proprietary information with all of its users. Oops!

I strongly recommend you read the whole article to understand these and other potential risks.

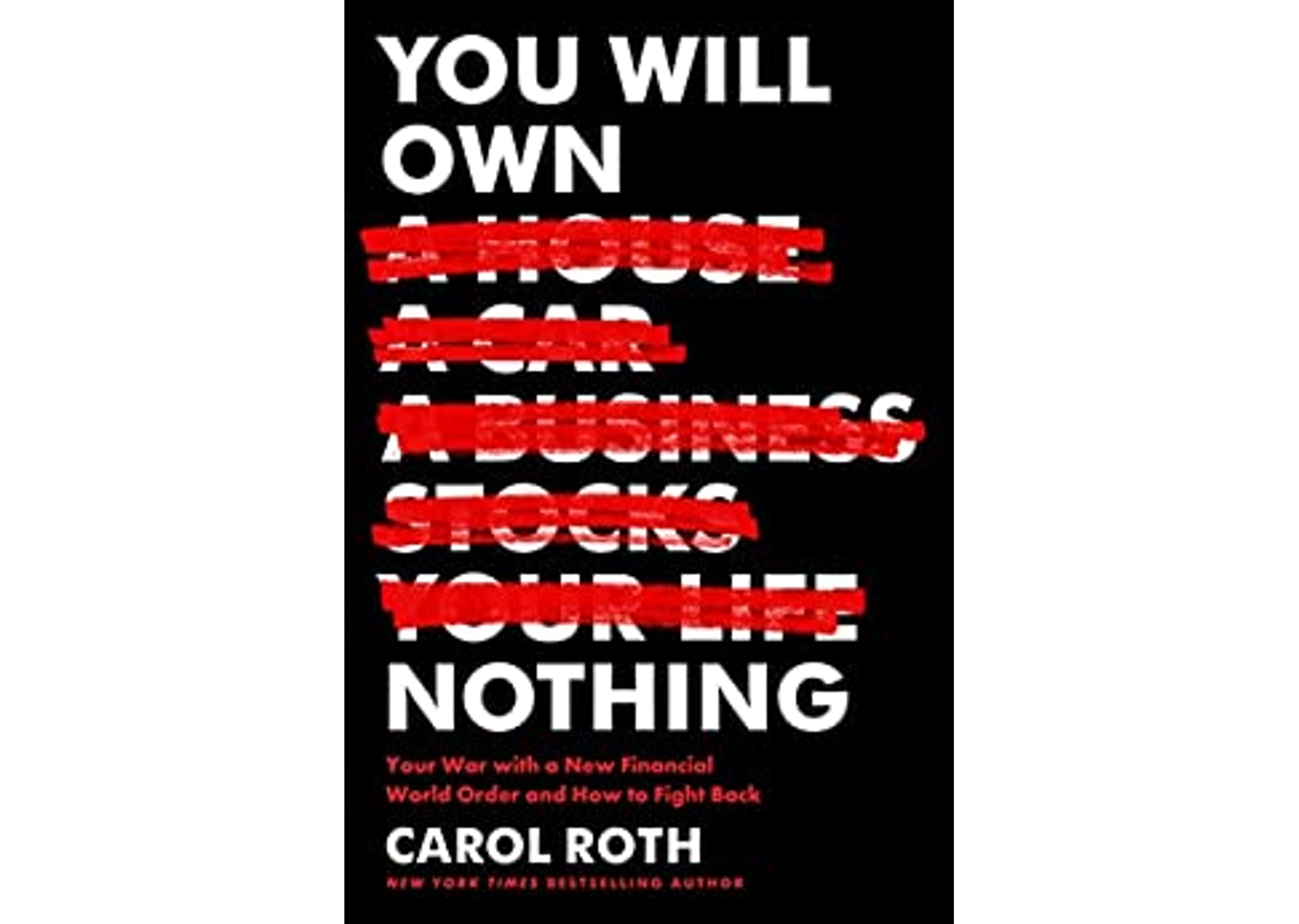

I could easily use an AI program to help me come up with ideas, first drafts, or even complete posts for this blog, though to date I have not. Would anyone notice? I don’t know. I have hesitated for exactly the reasons above: I don’t want to put Carol at risk.

Other writer colleagues have shared that they use AI programs to help them all day every day.

In the end, you will need to make the decision for yourself.

Photo by Nick Fewings on Unsplash